The black art of platform conversions: The challenges of integrating and recreating engines and platforms

Sponsored article: Abstraction Games takes another deep-dive into the straightforward port process

This is the second part of our deep-dive into "the straightforward port," which is the initial step to any platform adaptation. Ironically, the process is not straightforward at all, so we decided to split it into two parts to make it easier to read. You can refresh your memory on the first part on this page.

To pick up where we left off last time, the goal and deliverables of the straightforward port are simple: make sure the game runs on the target platforms 'as is', with no time spent on tailoring or optimization, apart from what is necessary to prove the game is running on the new platform.

In the last article, we covered the first two 'approaches' to get to the straightforward port:

- Switching platforms in an engine that already supports the target platform

- Adding a new platform to an existing engine

This time we'll be going over the remaining three approaches, which are:

- Integrating the game in an existing engine

- Reverse engineering or recreating an engine or framework

- Recreating an entire platform

Once again, we will try to illustrate our points by calling back to specific war stories from the Abstraction vault.

3. Integrating the game in an existing engine or Silverware

Not all titles we create adaptations for are built in modern engines. "Project Iron Bear" (code name) was such a game. It was built in a proprietary engine from 2001, entirely written in C, and had barely any platform abstraction layers since it only targeted the original Xbox at the time.

Adding one or more platforms to such an engine is a lot of work and any work you do for it is generally not reusable. To overcome this type of problem, we built our own in-house platform abstraction layer called Silverware. It consists of a vast set of libraries that abstract away all core systems (for rendering, saving, achievements, etc.) offered in game platform SDKs and runs on all current platforms. Therefore, if you port a game to Silverware once, a process we refer to as "normalization", the game effectively already runs on all supported platforms.
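To make the idea concrete, a platform abstraction layer can be pictured as a set of interfaces that game code calls, with one backend per platform behind them. The sketch below is illustrative only: `SaveStorage`, `MemorySaveStorage`, and `save_progress` are hypothetical names, not Silverware's actual API, and the in-memory backend merely stands in for a real platform SDK.

```cpp
#include <map>
#include <string>
#include <vector>

// Hypothetical interface for one core system (saving). Not Silverware's API.
class SaveStorage {
public:
    virtual ~SaveStorage() = default;
    virtual bool write(const std::string& slot,
                       const std::vector<unsigned char>& data) = 0;
    virtual bool read(const std::string& slot,
                      std::vector<unsigned char>& out) const = 0;
};

// Stand-in backend that keeps saves in memory. A real backend would wrap
// the save API of one platform SDK behind the same two functions.
class MemorySaveStorage final : public SaveStorage {
public:
    bool write(const std::string& slot,
               const std::vector<unsigned char>& data) override {
        slots_[slot] = data;
        return true;
    }
    bool read(const std::string& slot,
              std::vector<unsigned char>& out) const override {
        const auto it = slots_.find(slot);
        if (it == slots_.end()) return false;
        out = it->second;
        return true;
    }

private:
    std::map<std::string, std::vector<unsigned char>> slots_;
};

// Game code depends on the interface only, never on a platform SDK.
inline bool save_progress(SaveStorage& storage, unsigned char level) {
    return storage.write("progress", {level});
}
```

Because `save_progress` only sees the interface, supporting another platform in this sketch means adding one more backend class rather than touching game code.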

Let's look at this and other challenges faced when creating adaptations for older games in more detail by examining "Project Iron Bear" more closely:

- "Project Iron Bear" | Wouter van Dongen (lead programmer)

We always kick off Silverware-based projects with a "normalization" pass, during which we integrate Silverware into the already supported platform target and replace all platform-specific code, in other words, all code built against platform SDK functionality. In Iron Bear's case, the supported target was 32-bit Windows.

Normalization can be done system-by-system to ensure that everything keeps working correctly, but in some cases, a more fine-grained approach is required. For large systems such as rendering, for example, we replace pieces of graphics code feature-by-feature.

When getting hands-on with older codebases, various surprises can surface. For instance, in Iron Bear, we ran into workarounds that had been implemented for the keyword "const" not being available when the game was developed. These outdated workarounds tripped modern compilers, so we had to disable them altogether.

Old, or just very platform-specific, tricks were being employed that no longer work on modern operating systems and consoles, as a lot of things that are relevant today were not a concern in the past. Patching is a great example of this, as it is a relatively recent way of doing things. Back in the day, when a game was released, it was final.

Iron Bear's cooked content was roughly 2GB but was different every time due to pointers, garbage memory, and the lack of deterministic order (sorting based on memory addresses).
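One of the sources of non-determinism mentioned above, ordering by memory address, can be removed by sorting on a key that is stable across runs. The following is a minimal sketch, assuming each asset has a unique name; `Asset` and `sort_deterministically` are hypothetical names used for illustration only, and the sketch does not address the pointer and garbage-memory issues.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Hypothetical asset record. The name is stable between runs of the
// cooking tool; the address of the object is not.
struct Asset {
    std::string name;
    int payload;
};

// Order assets by name instead of by pointer value, so the cooked output
// lists them in the same order on every run.
inline void sort_deterministically(std::vector<const Asset*>& assets) {
    std::sort(assets.begin(), assets.end(),
              [](const Asset* a, const Asset* b) { return a->name < b->name; });
}
```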

The game was originally released as a 32-bit executable, and a lot of the game data went straight off the disk into memory, relying on the memory layout to be the same with each run.

However, on 64-bit, datatypes have different sizes, and since the assets we worked with were 32-bit, we could not simply load them into memory.

As mentioned earlier, memory is stored straight to disk without any conversions. So for this project, we decided to keep the source data as is and patch the data during loading. This allowed us to stay compatible with the original data and exchange it back and forth if necessary. One additional distinct advantage was that it also kept the 32-bit version alive during development for comparison.

- Booting the game

To patch the data, we had to identify the data definitions (type information of structs) with a different size in 64-bit and find a way to make up for the size difference.

It turned out that a lot of the definitions contained pointer types but were exposing these as "long" types. By adding an explicit pointer type to the definition format and adjusting all of the definitions that contained pointers (a lot of them), we were able to determine the size of the data we would load into memory. It would, however, not match the memory layout, so we added a simple mechanism to shift all the data around.

This may sound inefficient, but this was only the case when we were dealing with individual assets. From there, we used a 64-bit version of the tools to generate new map files (all the data ordered and packed together) that could, again, be loaded directly into memory in both 32-bit and 64-bit layouts.
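The load-time patching described above can be sketched roughly as follows. This is a minimal illustration under assumptions, not the project's actual code: `FieldKind`, `expand_record`, and the flat list-of-fields definition format are hypothetical, every field is assumed to occupy 4 bytes on disk, and the disk data is assumed to share the host's byte order. Pointer fields are widened to 8 bytes and aligned, which is what shifts the data that follows them.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical field kinds in a data definition. 'Pointer' stands for the
// explicit pointer type added to the definition format.
enum class FieldKind { Int32, Pointer };

// Size of a field in the 64-bit in-memory layout (4 bytes on disk always).
inline std::size_t memory_size(FieldKind kind) {
    return kind == FieldKind::Pointer ? 8 : 4;
}

inline std::size_t align_up(std::size_t offset, std::size_t alignment) {
    return (offset + alignment - 1) / alignment * alignment;
}

// Expands one record from the 32-bit disk layout to a 64-bit memory layout.
inline std::vector<std::uint8_t> expand_record(const std::vector<FieldKind>& def,
                                               const std::uint8_t* src) {
    std::vector<std::uint8_t> out;
    std::size_t max_align = 1;
    for (FieldKind kind : def) {
        const std::size_t size = memory_size(kind);
        if (size > max_align) max_align = size;
        const std::size_t offset = align_up(out.size(), size);
        out.resize(offset + size, 0);  // padding bytes are zero-filled

        std::uint32_t raw = 0;
        std::memcpy(&raw, src, 4);     // every disk field is 4 bytes wide
        src += 4;

        if (kind == FieldKind::Pointer) {
            const std::uint64_t wide = raw;  // zero-extend to 64 bits
            std::memcpy(out.data() + offset, &wide, 8);
        } else {
            std::memcpy(out.data() + offset, &raw, 4);
        }
    }
    out.resize(align_up(out.size(), max_align), 0);  // tail padding
    return out;
}
```

In this sketch a record of `{Int32, Pointer, Int32}` grows from 12 bytes on disk to 24 bytes in memory, with the pointer moved from offset 4 to offset 8. A real loader would also have to turn the widened value into a valid address.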

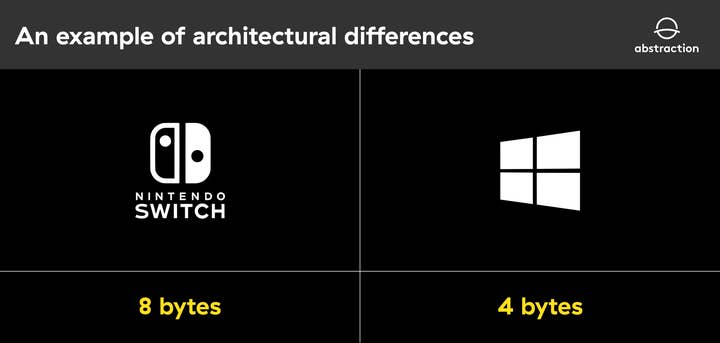

Moving to other platforms such as Switch, we ran into architectural differences where a "long" is 4 bytes on Windows, but 8 bytes on Switch. The game also had an early implementation of "Unicode", relying on "wchar_t", which is 2 bytes on Windows and Xbox but 4 bytes on other systems. We solved this by replacing these types with fixed size ones, "int32_t" and "char16_t" respectively. Additionally, string operation functions were replaced to use char16_t variants.
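As a small illustration of the type replacement described above, the sketch below shows a record that keeps the same layout on every target once "long" and "wchar_t" are swapped for fixed-size types. `DiskLabel` and `length16` are hypothetical names, not taken from the game's code.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>

// Hypothetical record that is loaded straight from disk.
struct DiskLabel {
    std::int32_t id;   // was 'long': 4 bytes on Windows, 8 on LP64 targets
    char16_t text[6];  // was 'wchar_t': 2 bytes on Windows, 4 elsewhere
};

// Fails the build if a target lays the record out differently.
static_assert(sizeof(DiskLabel) == 16, "DiskLabel must be 16 bytes everywhere");

// char16_t counterpart of wcslen, built on the standard character traits.
inline std::size_t length16(const char16_t* text) {
    return std::char_traits<char16_t>::length(text);
}
```

The `static_assert` turns a silent layout mismatch into a compile error on any platform where the assumption does not hold.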

- We have a visual

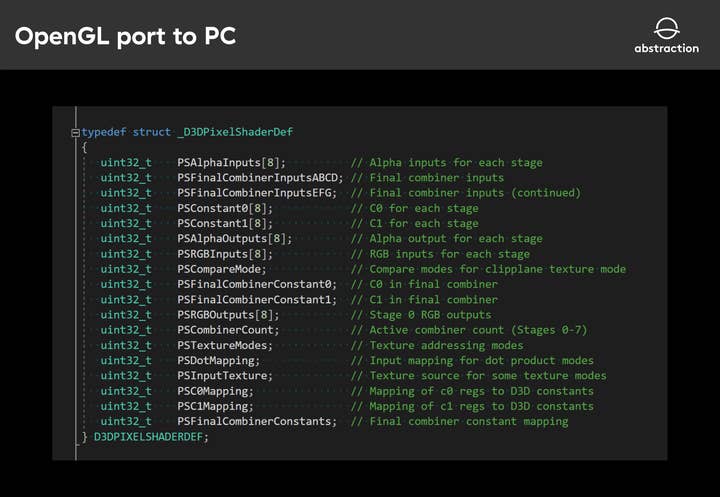

Iron Bear originally targeted a graphics API that featured a precursor to pixel shaders, namely, pixel combiners. These were utilized in a code-driven manner as opposed to the shaders we know today, which are more decoupled and somewhat data-driven. Luckily for us, an earlier OpenGL port to PC was available that translated these pixel combiners to OpenGL extension equivalents such as ARB shaders (a precursor to GLSL) and Nvidia Register Combiners.

For most ports, graphics are the largest chunk of initial work. To be able to parallelize work on the project as best as possible, you need something rendered on screen fast. This is where our internal platform abstraction layer Silverware comes in.

We have ported many games throughout the years, and this allowed us to build a framework to abstract away platform intricacies with a single API and interface. Porting the graphics over to this API theoretically means you only do the work once, and you have support for all platforms. Of course, each game is unique and does things in its own ways, so Silverware is constantly being updated to account for these cases and to generally adopt an increasing variety of calling characteristics.

While the aforementioned parts were being ported, other members of the team could work on infrastructural setup tasks, like build server integration.

- Input, audio, saving, etc.

As the original game was already running on PC, we were able to swap out all the other subsystems with Silverware as well. The amount of work for smaller systems such as input, audio, and saving fully depends on how the game code makes use of them.

For Iron Bear, saving also turned out to be plain memory dumps, relying on deterministic memory addresses, which became an issue only after we started porting to consoles. Luckily, all of the data that was saved made use of just three container storage structures. This allowed us to implement a small system that patched the pointers present in those structures. In this case, what seemed like a daunting task at first turned out to be trivial.
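One common way to patch pointers in a raw memory dump is to rebase them: record the address the block lived at when it was saved, then shift every stored pointer by the difference to the address it is loaded at. The sketch below illustrates that general technique and is not necessarily how the Iron Bear save system works; `rebase` and the idea of storing the base address alongside the dump are assumptions.

```cpp
#include <cstdint>

// Translates a pointer value stored in a save dump to the address space of
// the current run. 'saved_base' is where the dumped block started when the
// game was saved, 'loaded_base' is where the same block starts now.
inline std::uint64_t rebase(std::uint64_t saved_address,
                            std::uint64_t saved_base,
                            std::uint64_t loaded_base) {
    if (saved_address == 0) {
        return 0;  // null pointers stay null
    }
    return loaded_base + (saved_address - saved_base);
}
```

With only a handful of container structures holding pointers, applying a function like this to each of their pointer fields after loading is enough to make the dump usable at a new address.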

With all systems ported over to Silverware, we moved on to tailoring and getting the product ready for certification.

Adding support for the PS4 was one of the last things on the checklist, but it was a breeze, since Silverware already had support for it.

4. Reverse engineering or recreating an engine or framework

Sometimes a game is built with technology that cannot be reused on other platforms at all, for example because the technology doesn't support the platform and its source code is not available. One such example is games made with GameMaker. Whereas GameMaker Studio nowadays allows developers to target multiple platforms, its older versions were limited to Windows, so bringing a game to other platforms required different measures, as we describe below.

- "GameBaker" | Adrian Zdanowicz (programmer)

Hotline Miami, released on PC in 2012, was developed with GameMaker 7. Since accurately recreating the entire game from scratch was not realistically feasible, we came up with a framework called "GameBaker" for the console versions. The core concept behind GameBaker was to keep the original assets and game logic code written in GameMaker Language and convert them to generic formats and C++ code, respectively.

Logically, GameBaker consists of two workflows. First, a set of tools to process the game's GMK file, extract its assets and their metadata, and automatically convert GML scripts to C++. At this point, we adapt assets to platform-specific formats and give them an optimal layout -- for example, sprites are combined into atlases whenever possible, and sound files are converted to formats specific to a platform to avoid runtime conversion.

Once the assets and scripts are ready, the rest of the workflow is like a "traditional" game.

To support the files and scripts we converted, the second part -- or "backbone" -- of GameBaker consists of carefully reverse-engineered systems that mirror the behavior of the GameMaker run-time as closely as possible. At this point, the majority of the system functions available to games from GML have been reimplemented, and systems emulating GameMaker's rendering and collision logic have been put in place. Note that we also had to carefully replicate some implementation errors from the original technology. The result is a game that plays identically to the GM original.

GameBaker has evolved over the years with every game it was used on, and the graphics engine saw the biggest changes. For the initial console releases of Hotline Miami, GameBaker integrated with Phyre Engine, which later was also used in the upgraded PC release. We were then asked to replace Dennaton/Devolver's original GameMaker 7 version on Steam with our own GameBaker for better run-time performance and improved stability. For Hotline Miami 2, we even ended up replacing Phyre Engine with Silverware to lower any remaining friction in after-sales maintenance.

With this approach, GameMaker is reduced to the role of an "editor tool". While this has not been exercised much with the first Hotline Miami -- as we were essentially working on a finished game -- this has changed fundamentally with Hotline Miami 2: Wrong Number since we co-developed it with Dennaton Games from the start. Since GameBaker did not feature any user-friendly editors, the game was developed by Dennaton in GameMaker, and regularly received updates to convert to our technology, allowing it to run on multiple platforms.

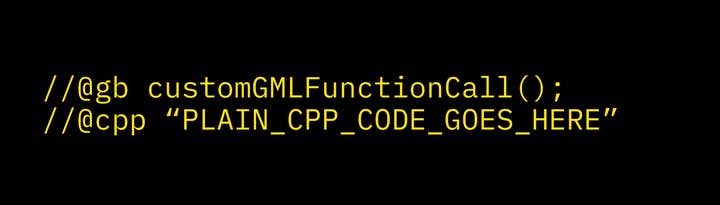

So what about the various features that GameMaker did not support out of the box, such as achievements or Steam Workshop used for the Level Editor in Hotline Miami 2? To accommodate such additions, we developed our own GML syntax extensions and custom system functions. Due to the iterative nature of development, it was crucial not to break the GameMaker version of the game at any point -- therefore, our GML extensions came in the form of specially formatted comments.

This way, as far as GameMaker's GML interpreter was concerned, these additions were nothing more than comments and were ignored -- while GameBaker's GML -> C++ converter recognized them. With this system in place, we were then free to implement custom additions without the need for modifying the autogenerated C++ code.
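The comment-based approach can be illustrated with a small sketch of how a converter might pick such extensions out of a script. The actual GameBaker syntax is not shown here, so the `//#gb ` marker, `kMarker`, and `extract_extension` are all hypothetical.

```cpp
#include <cstddef>
#include <string>

// Hypothetical marker. To GameMaker's interpreter this is an ordinary line
// comment; a converter can treat the text after it as an extension.
const std::string kMarker = "//#gb ";

// Returns true and fills 'payload' if the line is an extension comment.
// Plain comments and regular GML statements return false.
inline bool extract_extension(const std::string& line, std::string& payload) {
    const std::size_t start = line.find_first_not_of(" \t");
    if (start == std::string::npos) {
        return false;  // empty or whitespace-only line
    }
    if (line.compare(start, kMarker.size(), kMarker) != 0) {
        return false;  // does not begin with the marker
    }
    payload = line.substr(start + kMarker.size());
    return true;
}
```

A converter built this way would route the payload to its own handling for custom functions, while every other line goes through the normal GML to C++ translation.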

5. Recreating an entire platform

Sometimes, even though the source code of the game and its underlying technology is fully available, it still cannot be used as is. This is commonly due to the source code being specifically written for the original hardware and that hardware being just way too different from the target platform.

- The Chaos Engine, adapting a game from a classic platform | Wilco Schroo (lead programmer)

Bringing The Chaos Engine, an Amiga 500 game that's almost three decades old, to PC platforms came with its own unique set of challenges. The goal was to have the original game running on PC platforms and add additional features like multiplayer, achievements, and snapshot saving. We also added togglable enhancements to the game, such as a smoothing filter, a bloom filter, and 360-degree character movement for analog controllers.

The first challenge was running the game on PC. We had access to the original source material, but this was all Amiga assembly code that could not be simply built for PC. After researching what was possible, we decided that our best option would be to convert the original assembly code to C++ and build an application around it that simulated or emulated parts of the Amiga hardware, and provided extra functionality to the original game.

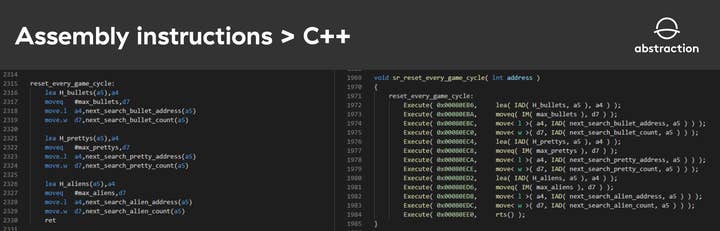

For the conversion, we built a tool that parsed the assembly and created C++ code from the assembly instructions, effectively translating them to C++ function calls, with a few specializations that allowed us to map assembly instructions to existing C++ functionality. Since we wanted to make most of the changes to the game in the original assembly code, we made sure that the toolchain was fully automated and that we didn't have to manually alter the C++ code.
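To give a feel for what translating an instruction into a function call means, here is a deliberately tiny sketch. It is not the actual tool: `translate_line` is a hypothetical name, and it only handles a mnemonic followed by plain register operands, whereas real 68k code also uses immediates, addressing modes, and labels.

```cpp
#include <sstream>
#include <string>

// Turns one assembly line such as "move.w d0,d1" into the text of a C++
// call such as "move_w(d0, d1);". Only simple register operands are handled.
inline std::string translate_line(const std::string& line) {
    std::istringstream in(line);
    std::string mnemonic;
    std::string operands;
    in >> mnemonic >> operands;

    // "move.w" is not a valid C++ identifier, so the size suffix is joined
    // with an underscore instead of a dot.
    for (char& c : mnemonic) {
        if (c == '.') c = '_';
    }

    std::string call = mnemonic + "(";
    for (char c : operands) {
        if (c == ',') {
            call += ", ";
        } else {
            call += c;
        }
    }
    return call + ");";
}
```

Keeping the translation this mechanical is what makes it possible to regenerate the C++ at any time from edited assembly.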

After generating the C++ code, the first thing we had to do was build a 68k CPU simulator that would simulate the CPU to such a degree that the game was able to run from the generated C++ code. For this, we had to rebuild the CPU state and state management in code and implement every assembly instruction according to the specifications of the CPU. We also had to write simulators for the audio, file I/O, blitter, copper, and keyboard/joystick input. With all of this in place, we were able to play the original game on a PC.
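As a rough picture of what such a simulator involves, the sketch below models a small part of the 68k state and one instruction, ADD.W between two data registers, including the condition codes it updates. `Cpu68k` and `add_w` are hypothetical names and this is an illustration under simplifying assumptions, not the simulator described above; a complete one would cover every instruction and addressing mode.

```cpp
#include <cstdint>

// Minimal slice of the 68k register state.
struct Cpu68k {
    std::uint32_t d[8] = {};  // data registers D0-D7
    std::uint32_t a[8] = {};  // address registers A0-A7
    std::uint32_t pc = 0;     // program counter
    bool n = false;           // negative
    bool z = false;           // zero
    bool v = false;           // overflow
    bool c = false;           // carry
    bool x = false;           // extend
};

// ADD.W Dsrc,Ddst: adds the low words, leaves the upper word of the
// destination untouched, and updates the condition codes.
inline void add_w(Cpu68k& cpu, int src, int dst) {
    const std::uint16_t s = static_cast<std::uint16_t>(cpu.d[src]);
    const std::uint16_t t = static_cast<std::uint16_t>(cpu.d[dst]);
    const std::uint32_t wide = static_cast<std::uint32_t>(s) + t;
    const std::uint16_t r = static_cast<std::uint16_t>(wide);

    cpu.d[dst] = (cpu.d[dst] & 0xFFFF0000u) | r;
    cpu.n = (r & 0x8000u) != 0;
    cpu.z = (r == 0);
    cpu.c = wide > 0xFFFFu;
    cpu.x = cpu.c;  // X mirrors C for ADD
    // Signed overflow: both operands share a sign that the result lacks.
    cpu.v = ((s ^ r) & (t ^ r) & 0x8000u) != 0;
}
```

Generated code like `add_w(cpu, 0, 1);` can then be stepped through in an ordinary C++ debugger.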

With the game running on PC, we could start adding features and enhancements. Thanks to the approach we had taken, we could easily add all the new features and enhancements since we essentially had full control over everything. Having everything in C++ also allowed us to debug the game, just like any other PC application.

That's it for the third article of the series. As we have hopefully illustrated, straightforward ports are rarely as simple as their name suggests. Games are as complex and unique as the people making them, so building a process that covers all the bases takes time and experience. Abstraction has made it its goal to find creative solutions to difficult problems, so join us again in a couple of weeks when we discuss tailoring: how to make an adaptation really stand out.

If you would like to find out any further information or just have a chat then please contact us here.

To read the first article of Abstraction's 'black art of platform conversion' series, dedicated to the technical assessment, click here. To read the second article, dedicated to the other approaches to the (not so) straightforward port, click here. Lastly, you can read about Abstraction's approach to the tailored port on this page.

Authors: Ralph Egas (CEO of Abstraction), Erik Bastianen (CTO of Abstraction), Wouter van Dongen (lead programmer), Adrian Zdanowicz (programmer), Savvas Lampoudis (senior game designer), Wilco Schroo (lead programmer)