Performance capture in the era of remote working

The GamesIndustry.biz Academy explores the challenges of remote performance capture -- and how to overcome them

In the past few months, as the COVID-19 pandemic took over even trivial aspects of our daily lives, the games industry did its best to carry on as usual. But many areas in games were just not able to progress as efficiently without the convenience of a shared space. Performance capture is one of them.

In normal circumstances, developers who have performance capture needs either have an in-house studio, or work on the premises of their external partners -- neither of which is feasible while remote working and social distancing.

"Motion capture has been a challenge for us," says Webb Pickersgill, performance director at Deck Nine Games. "We typically have a reasonably-sized capture stage, about 20 feet by 20 feet, in our studio outside of Denver, Colorado. So when we were asked by our leaders: 'How can we take this remote?', our brains exploded a little bit, because we had no idea how we were going to do it."

After a month of trial and error, Deck Nine put together a hardware kit that would allow for performance capture from home, and shipped it to its lead actor.

"It took a lot of time, especially with the virus, to ship materials," Pickersgill adds. "Just getting the equipment in was most of that time, honestly. We're now shooting in our actor's basement, which is very small -- less than ten feet by 12 feet -- and a large portion of one wall is just equipment, cameras and lighting."

"We're doing things that we never would have considered to do on a regular mo-cap stage"

Chad Gleason, Deck Nine

Chad Gleason, innovation technology director at Deck Nine, continues: "I think when we first went into it, there was this question of: are we going to have to completely radically change aspects of our production as a result of this? But we very quickly established that this was what we had to do, or we wouldn't finish. There was this 'do or die' attitude and it just opened up the playbook. Things that we wouldn't ordinarily consider, things that we would be relatively close-minded about... All that went out the window. We're doing things that we never would have considered to do on a regular mo-cap stage."

From how you can build a hardware kit and which software you can use, to how to work with your actors remotely and run a session with a skeleton crew, this guide will explore the many facets of performance capture in the era of remote working.

The various approaches of remote performance capture

When the province of Ontario declared a state of emergency at the end of March, Ubisoft Toronto had to close its doors and find ways for production to move forward remotely.

"We increased the use of keyframing and the reuse of existing motion capture data," performance capture director Tony Lomonaco says. "We have also done some major upgrades to our video systems to facilitate remote communication on the floor. The combination of those methods allowed us to keep our teams safe, and production on track."

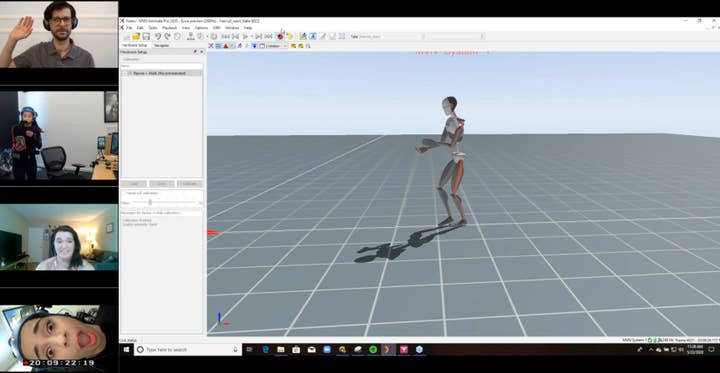

Keyframing was also an alternative Deck Nine considered, but the Life is Strange: Before the Storm developer ended up settling on a portable hardware kit (see picture below).

"This is a picture of the rack -- which is actually two racks -- that we decided to design," Pickersgill says. "Weight was one consideration. Just being able to have an actor be able to carry these cases by themselves and put them into a space was a consideration. So we chose to break it into an audio specific case and a mo-cap specific case. It's easier for transport, but it also allowed us to split out our audio-only package."

Deck Nine wanted to be able to split the kit into two distinct audio and motion capture packages, because it started doing remote voiceover before moving on to motion capture.

"So just half of this kit went to a couple of actors, who captured VO sessions, before it rejoined with its sibling, the mo-cap kit, to arrive at our lead actor's place," Pickersgill explains.

"Being able to have an actor carry these cases by themselves and put them into a space was a consideration"

Webb Pickersgill, Deck Nine

Before the pandemic, Deck Nine relied on Vicon's optical systems for its performance capture. If you're unsure about the terminologies and the different types of capture systems usually available, you can refer to Epic Games' white paper on the topic, which explains that "an optical system works with cameras that 'see' markers placed on the actor's body and calculate each marker's 3D position many times per second."

Because they rely on multiple tracking cameras, optical systems are usually quite expensive and, more importantly, not suitable for performance capture from home.
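To make the white paper's description a little more concrete, here is a minimal sketch of how a marker's 3D position could be estimated once two cameras both "see" it. It is an illustration only, not Vicon's or Epic's actual method: the camera positions, the ray directions and the function name `triangulate_midpoint` are all hypothetical, and real optical systems use many calibrated cameras and more robust solvers.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3D vectors."""
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(cam1: Vec3, dir1: Vec3, cam2: Vec3, dir2: Vec3) -> Vec3:
    """Estimate a marker position from two camera rays (hypothetical helper).

    Each ray starts at a camera position and points toward where that camera
    sees the marker. Because of noise the rays rarely intersect exactly, so
    this returns the midpoint of the shortest segment joining them.
    """
    w = tuple(p - q for p, q in zip(cam1, cam2))
    a = _dot(dir1, dir1)
    b = _dot(dir1, dir2)
    c = _dot(dir2, dir2)
    d = _dot(dir1, w)
    e = _dot(dir2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the marker position is ambiguous")
    t = (b * e - c * d) / denom  # distance parameter along ray 1
    s = (a * e - b * d) / denom  # distance parameter along ray 2
    point1 = tuple(p + t * v for p, v in zip(cam1, dir1))
    point2 = tuple(p + s * v for p, v in zip(cam2, dir2))
    return tuple((x + y) / 2 for x, y in zip(point1, point2))


if __name__ == "__main__":
    # Two assumed cameras four units apart, both looking at the same marker.
    print(triangulate_midpoint((0, 0, 0), (2, 1, 3), (4, 0, 0), (-2, 1, 3)))
    # prints (2.0, 1.0, 3.0)
```

A single camera only gives a direction, not a distance, which is why at least two views are needed per marker and why a room full of cameras is hard to reproduce in an actor's basement.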

"Obviously an optical system wasn't going to work out, so we decided to leverage the Xsens system which doesn't require cameras," says Gleason. "[We use] Faceware to do all the face capture and that whole system turned out to be quite portable. We're also employing the Manus VR gloves for finger capture because it interfaces directly with the Xsens system and their software, so we were able to get up and running really quickly.

"So those are the core technologies we're employing for [performance capture from home] and it's worked out incredibly well."

Deck Nine's main considerations were: How can the setup be made less technically invasive for the actor? Can the actor suit themselves up? Is the software compatible with Deck Nine's own software?

For reference, you can find the list of the main equipment Deck Nine ended up using for its portable solution in this pdf, as well as extra resources (we'll touch upon software more in-depth below). Deck Nine owned about half of that equipment before the pandemic started, and had to buy the other half, Pickersgill says.

"We had the AJA recorder, it was part of our current in-studio setup and we had some converter boxes and timecode boxes. Some of the pieces that we didn't have were the cases and the rack mounting equipment, and some of the internal wiring. We needed really short wires instead of these gargantuan wires in order to wire things up properly.

"In some cases we were already making use of some equipment, so we had to order back up units, like an audio interface device for example. We had laptops, but we needed dedicated laptops for this so we had to order those special. In that case, we ordered one laptop for the mo-cap case and one laptop for our audio case, so they can operate as independent solutions."

Finding the right remote capture software

In terms of software, outside of what will often be provided alongside the hardware, you can easily find freeware that will help with your remote performance capture needs.

"We looked at remote control software, because you need to be able to remote control the laptop," Pickersgill says. "We don't have our actors operate the laptop -- they turn it on and walk away. We looked at various [remote control options] and they're all dead expensive. We ended up going with Chrome Remote Desktop, of all things, because it allows you to go through firewalls without any special configuration, so I can remote control a laptop in somebody's home. And not to mention it's free."

"We went full circle and came back to Zoom, like everybody else did"

Webb Pickersgill, Deck Nine

Deck Nine also looked at various video streaming options, as the team needed to be able to see through the remote cameras.

"We went full circle and came back to Zoom, like everybody else did," Pickersgill says. "Zoom offers a video stream, [thanks to] which I can see my actor and hear them in full quality, so I know I'm getting the performance, but it also allows us to share a screen at the same time, so that I can see our set up and I can see if we're rolling. So I can see if technically everything's working before I call action."

Another important piece of software for the team is Reaper, which relates more to the audio side of performance capture.

"Reaper is an audio tool for recording audio -- ReaStream is a built-in plugin into Reaper and it allows you to stream the full high-quality audio through a VPN. So we stream our audio, high quality, from our basement in Colorado to our audio engineer in Michigan, using Reaper and ReaStream.

"The other thing we also use is called Source Connect. It's another audio tool for streaming real time video. We use that in some circumstances, but it's the same thing, it allows you to hear full quality. It's like a Zoom call but high quality, and you can actually record high quality [audio] over it."

You can find some more resources about software in the pdf file listing the equipment Deck Nine used.

How to work with actors remotely

The challenges of performance capture from home don't stop once the kit is built and the software mastered. Working remotely also fundamentally changes the way you work with your actors, from the moment you cast them.

"We had the ability to continue casting through remote auditions via video conference as well as through self-tape submissions, which was a method that the industry was already using," says Angela Bottis, casting production manager at Ubisoft Toronto. "For teams that are looking to cast talent for their games during this time, I would recommend providing detailed and well thought-out safety guidelines for the proposed production in advance. This is what we have been doing at Ubisoft Toronto, and it lets talent and agents know that they'll be working with a team that's prioritising everyone's health and safety."

"Let talent and agents know that they'll be working with a team that's prioritising health and safety"

Angela Bottis, Ubisoft Toronto

Unless you can afford to create multiple hardware kits, you may also have to ask your actors to perform more than one role.

"It was a big endeavour to set [the kit] up and we had to spend a lot of time working with that actor to let them know that, not only are you going to be performing your own role, but probably the roles of several other actors," Gleason says. "We are doing voiceover work with a lot of the other actors on the project, because it's a lot easier to get just a VO kit over to somebody else's residence, but the actual motion capture then would be recaptured by this one actor."

This is commonly called "recap," Pickersgill continues.

"So we have our actor that is in the basement. They do full performance capture, which is face, body and voice at the same time, in the sound controlled environment. But we also have situations where [other actors] record their audio at their home, through a remote session. We then send that audio to our lead actor. They rehearse it, basically -- it's like Automated Dialog Replacement, if you're familiar with filming video. They learn the cadence of the voice and once they've got it, they basically lip sync the VO performance and re-perform it physically with their face. We have to do a hand of content that way.

"It's probably a good option for some people, if you have somebody that is a really good mimic, who can reproduce somebody's cadence of speaking and everything really accurately. Using a Faceware kit and an Xsens suite -- even me, at home, even though I'm not an actor -- I can re-perform a VO and it goes in the game. It's another way of capturing content that we've adapted to and I think other people could adapt to as well, and it could be very beneficial."

Ubisoft Toronto's approach to working with its actors was to capture facial performance only, and then use that as an animation reference.

"We were able to capture facial performance in talents' own homes by having them record themselves using a stationary camera," Lomonaco explains. "To do this, the talent lip synched to existing dialogue tracks so that we could accurately capture facial performance. We would then use that facial performance as the starting point for our animation."

You'll obviously need to take the time to train your actors so they are aware of how the whole setup operates, and you need to make sure everything is functional ahead of the capture session.

"Previsualisation and rehearsals definitely beat reshooting a poorly planned shoot"

Tony Lomonaco, Ubisoft Toronto

"A best practice that we've adopted is making sure to schedule technical tests prior to session recordings," Bottis says. "In the instances where we're delivering equipment to talent -- cameras, microphones, etc. -- we want to make sure their setups are functional in advance. Once our technical setup is running smoothly, the next challenge becomes maintaining a good communication rhythm between the talent, director, and crew."

Lomonaco adds: "Whether you're at your studio or working from home, planning and rehearsals are a must when preparing performance capture shoots. Previsualisation and rehearsals are critical to success: they help you maximise your time on set, and they definitely beat reshooting a poorly planned shoot. For post-production, edit your reference videos from the shoot, lock in the timing, and only order the data you need."

And then, of course, you have to direct your actors remotely.

"The general vibe of physically being on set is a challenge to recreate working from home," Lomonaco says. "The ability to interact with talent face-to-face definitely has its advantages, the same way it does when you are physically among your colleagues."

Ubisoft Toronto has now slowly started to resume production following strict safety protocols, but even so the studio has to plan scenes differently to maintain safe social distancing, which is something to keep in mind.

"For example, we're capturing actors further apart from each other than we typically would, but then we adjust that distance and eyelines in animation," Lomonaco says. "Because of this, scenes are also taking longer to shoot, so we're building in extra time to get what we need."

While working with actors remotely is a challenge, it's not insurmountable. And there is at least one silver lining to the whole situation.

"Generally speaking, on large productions, actor availability is a very big limiting factor," Gleason says. "We've got a schedule and we want to maintain it, but we need to bring in three people from three different states and they aren't available next month, so I guess we just won't shoot that content next month.

"This endeavour has got the gears turning a little bit in terms of: well, if you can't come to the mo-cap stage, maybe the mo-cap stage can come to you. And that's something we are seriously considering as a possible lever we can pull to maintain the schedule going forward."

Communicating efficiently and with the right crew

Deck Nine cut the number of people working on performance capture once it went remote, but it's worth noting that the effort still requires a hefty team.

"We [would usually] have about ten, sometimes eleven, people that can be on our mo-cap crew stage at any one time," Pickersgill says. "Our crew now consists of the actor, a performance director, a system director, an audio engineer, a single capture technician that handles both face and body, and we often have a writer who comes and goes depending on the shoot. So it's still quite a large handful, considering it's a remote shooting.

"Having all those people's ears and eyes on this content is critical, but one of the drawbacks that came with it was communication. When you're in a mo-cap room, you can have five conversations of two people in corners of the room at the same time and still get things done. Whereas in a [remote capture] session you have one serial line of communication, which is the Zoom call that you're on, and only one person can speak at a time. If you want to have another conversation with somebody else, you have to wait and start that conversation. So obviously our communications needed to be very brief, clear and accurate to move things along as quickly as possible."

To make the communication smoother, don't underestimate the advantage of having strong external collaborators to help with the technical side of things.

"If you are going to go and do an endeavour like this, find good partners," Gleason says. "We went from never having touched an Xsens suit to deploying it in a [remote capture] situation [in a few weeks]. We were like: okay, we want to work with this technology we've never worked with, and we want to have someone who isn't qualified operate it, and set it up by themselves, in their home. What could go wrong, right?

"So to be able to actually be on the phone with Xsens and Faceware [so they can] help us realise this, was absolutely invaluable. I highly recommend finding good partners, it's critical."

"Communications needed to be very brief, clear and accurate to move things along as quickly as possible"

Webb Pickersgill, Deck Nine

Finding the perfect crew and the right partners may help you to discover new ways to work in the long term. Even once Ubisoft Toronto was able to resume its performance capture effort under strict guidelines, a number of collaborators remained remote workers, which led to new processes being implemented, for the better.

"Because we had drastically reduced physical team members on set, we increased the number of people involved remotely," Lomonaco says. "This has created a collaborative environment to share ideas and feedback from team members who are not always able to attend the performance capture session. Moving to remote work has given us the opportunity to implement additional best practices that will allow us to continue working from home when necessary, such as in the event of a snowstorm or issues with public transit."

The costs of remote performance capture

Performance capture has historically been a very expensive aspect of game development. However, new models are now emerging on both ends of the spectrum.

"Performance capture is as expensive as you want it to be," says Bradley Oleksy, capture manager at Ubisoft Toronto. "Piecing together small motion capture solutions can only get you so far. The use of AI is changing the playing field, but good captured data from high-end mo-cap systems train the AI data, so it is still valid. Some systems like 4D are emerging, but are not yet fully practical for our purposes due to the lack of tools and the amount of storage needed for processing."

Both Deck Nine and Ubisoft Toronto remained quite secretive about the exact cost of their remote performance capture efforts. But Remco Sikkema, senior marketing manager at Xsens, gives some details about the company's solutions: "Xsens motion capture starts at $12,000, but we have a special program for indie developers starting at $7,000. The investment depends on what hardware and software options you choose to best fit your specific situation."

Concerning Faceware, its various licences start at $179 per year. However, there are costs beyond the obvious aspects, Gleason points out.

"In a lot of ways the cost of motion capture isn't just the price tag of the kit"

Chad Gleason, Deck Nine

"I think in a lot of ways the cost of motion capture isn't just the price tag of the kit. You can hook up a suit and a face rig, and get data. And that's what's so exciting. But what do you do with that data, how can you manage so much data, how you get all these different people interfacing with it and doing what they need to do with it, still requires a bit of R&D.

"And that I think is more prohibitive than the gear itself. The gear is actually quite affordable and the results are really high quality, it's taking that next step and dealing with terabytes of data. Where do you put it, how do you manage it and how do you put it in the right hands?"

Pickersgill chimes in: "And how do you move it across the pipeline? We have actors in their home spaces, which have minimal upload speeds. And then you have gigabytes, terabytes of data that need to be uploaded to a company. So we're finding small things like that you don't imagine until you're actually in this situation.

"But what has been done now by larger companies, figuring out this home capture solution, is going to help pave the way. It's going to help show some configurations that work to people with maybe lower budgets."

Catarina Rodrigues, performance capture technician at Faceware, certainly believes that the current situation will improve the future accessibility of performance capture.

"When you have to figure out how to do something because it's something that's needed at that time, you just figure out how to do it at all costs. And it's interesting because I feel like it's only under these times that we realise our capability.

"Once studios do open up and everyone is free to go out and work in larger groups in one space, it makes me wonder how many people will continue working in this way, because we have become so well more adapted now. It does make me wonder how often will remote shoots become a standard even far into the production world."
