FaceIt asking players to help train Minerva AI in fairly tackling toxicity

Upcoming Justice update will allow Counter-Strike community to review cases flagged by the machine learning tool

Esports tournament platform FaceIt is advancing plans for its Minerva AI, inviting gamers to help hone the community management tool's judgement when dealing with toxic behaviour.

The firm announced the early results of the initiative last month, and CEO and co-founder Niccolo Maisto reiterated the highlights on stage during a recent Google Cloud Customer Innovations Series event.

Within the first month of its use, the Minerva AI identified that 4% of all chat messages in Counter-Strike: Global Offensive matches played through FaceIt were toxic -- and these messages affected more than 40% of matches.

After six weeks, Minerva had issued 20,000 bans and 90,000 warnings, leading to a 20% reduction in toxic messages and a 15% reduction in the number of matches affected by this behaviour.

Maisto told attendees the priority now is to build on this success with chat messages and find ways to automatically identify abuse in voice communications and even in-game behaviour, such as a player deliberately preventing a teammate from accomplishing something.

Because voice and in-game behaviour are more open to contextual interpretation than text chat, FaceIt has proposed getting the community involved in judging what does and doesn't count as toxic behaviour.

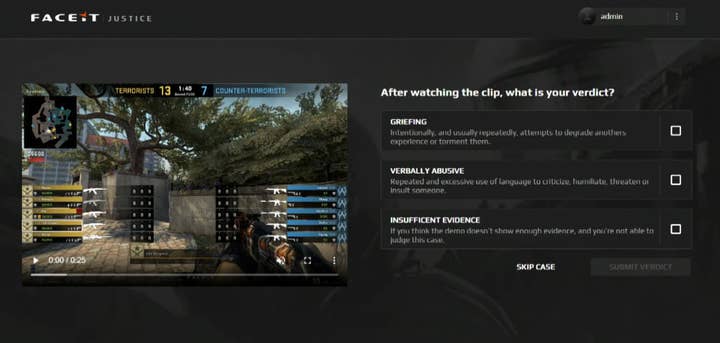

For Minerva's Justice update, the company will launch an online portal where Counter-Strike players can review cases identified by the AI. They will then be able to judge whether the behaviour in question is toxic, and suggest what sort of action would be an appropriate response.

Maisto said this will allow the community to "train the machine learning model with us" and address worries that an AI community manager will be too harsh with its punishments.

"One of the main concerns that our community expressed when we first announced Minerva was the fact that they didn't really understand who was setting the threshold, who was deciding whether behaviour was toxic or not," he said.

"It felt like it was a bit of a black box -- is it a machine ruling over behaviour? Is it a man behind the curtain playing judge on [people's] actions and making decisions on our behalf? In order to address this, what we decided to do is to do this with our community."