Nearly 26% of Discord user reports in the back half of 2020 were acted upon

Harassment complaints acted upon 15% of the time, while extremist or violent content reports led to action 38% of the time

This week, Discord released its transparency report covering July through December 31, 2020.

The data it shared includes user-submitted concerns, actions taken, and trends observed during that period.

Discord received 355,633 user reports during the second half of the year. The number of reports trended upward over the entire year, peaking at 65,103 in December.

The company said that general harassment continues to be the most common issue on the platform. Harassment accounted for 37% of all user reports, and cybercrime was the second most common category at 12%.

Discord also shared data on how often user reports submitted during that period were acted upon. According to that data, almost 26% of all reports led to some form of action.

While harassment was the most commonly reported problem, it was not the most commonly acted upon. Discord took action on 15% of the 132,817 user reports filed for harassment.

Discord acted more frequently on reports of doxxing (43%), exploitative content (43%), cybercrime (41%), and extremist or violent content (38%).

Discord noted the discrepancy between actions taken on different kinds of reports, saying it prioritized issues that "were most likely to cause damage in the real world."

These numbers stand in contrast to those Twitch released in its own transparency report last month, which indicated that less than 15% of user reports on the streaming platform led to action.

The only category of user report Twitch acted on more than 3% of the time was "Viewbotting, Spam, and Other Community" violations, which topped out at 26%.

"Trust & Safety uses the designation 'actioned' when they have confirmed a violation of our Community Guidelines and followed up with some action," Discord said.

"This can involve an account or server warning or deletion, a temporary account ban, a removal of content from the platform, or some other action that may not be immediately visible to the person submitting the report."

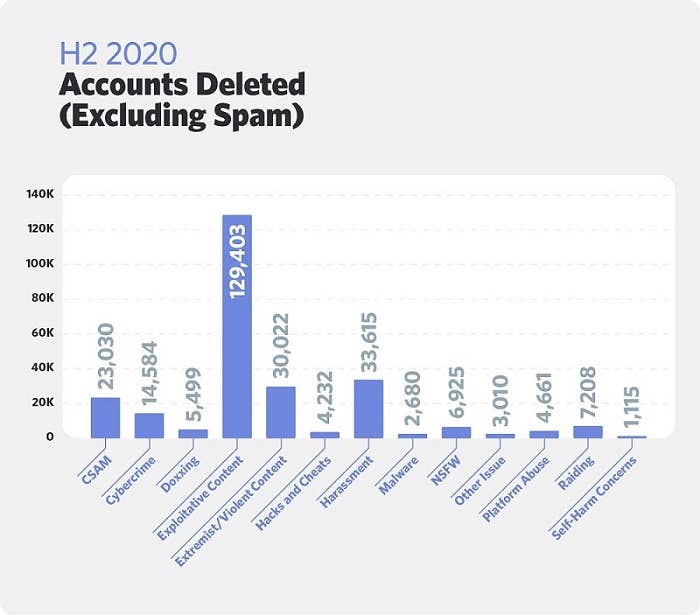

Just as there were disparities in how often different kinds of reports were acted upon, there were also disparities in how often those offenses led to the deletion of accounts.

Spam was far and away the leading reason for account deletion in the second half of 2020, with 3.26 million accounts axed for "spammy behavior." Exploitative content was the next most common reason, claiming 129,403 accounts, while harassment was third, resulting in 33,615 account deletions.

As for server deletion, 5,875 servers were taken down for being associated with cybercrime. The next most common reasons for servers being deleted were exploitative content (5,103) and harassment (3,463).