An introduction to how game development pipelines work

Room 8 Studio's 3D art director Maksim Makovsky explains to newcomers what a typical production pipeline can entail

Every video game starts with an idea that requires a lot of thinking and planning before it hits the drawing board. To bring even the simplest idea to life, it takes a team of designers, developers, and artists to see it through production.

The team members responsible for designing and crafting a game have different skill sets and responsibilities. Game design draws on different abilities that must be carefully combined to produce a fun and exciting experience.

Designers imprint their artistic vision and creativity on all elements of game production. However, artistry alone is insufficient. A team needs to have clear goals and a way to ensure all the resources are appropriately allocated to create efficiency and coordination. Project managers must provide everyone with the best tools for their jobs.

In other words, the main challenge studios face is finding the perfect balance between creativity and efficiency. There is no use in having a grand vision without having a production pipeline to make sure it sees the light of day.

This article provides an overview of a typical game development pipeline and how development tools are changing the design landscape. If you're a new studio head or producer, you should keep reading.

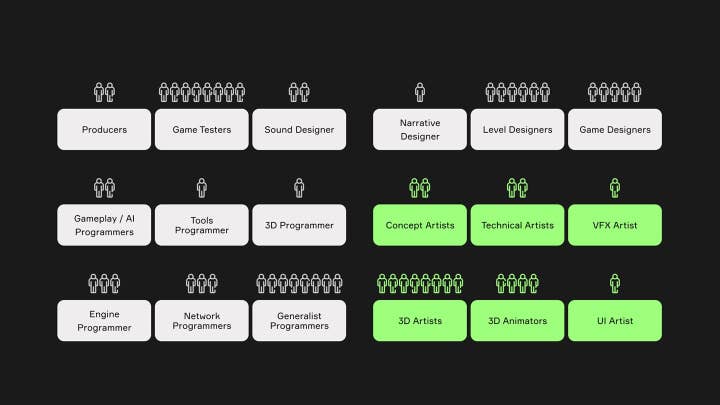

Ask a video game designer or the head of a studio about the process of game production, and they will usually start by describing the team's composition and the tasks each member handles.

Most studios are divided into departments with specific responsibilities and purposes to help build the final product without stepping on each other's toes.

This is what we call a production pipeline.

A pipeline can be visualized as a chain of production divided into different steps, each focused on one part of the final product.

We can divide it into three phases:

Pre-production

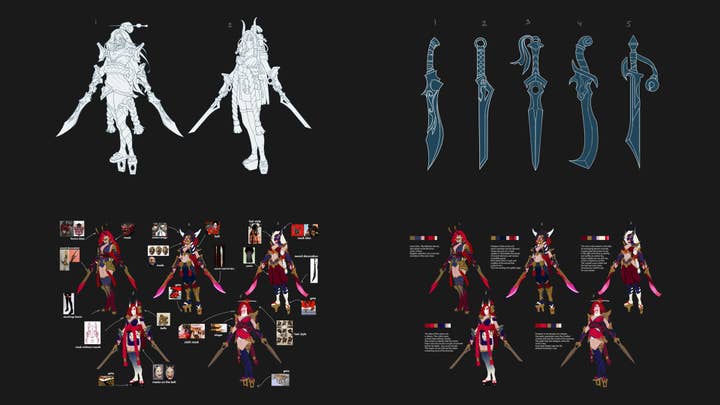

Here, a small team of artists and art directors gets together to create concept art, character designs, and storyboards. Ideally, team roles are filled each time a new project starts.

The first role for consideration should be the director of operations. Under that position, we usually have design, animation, programming, and art departments. It could expand to include audio, writing, and QA, depending on the scope and size of the project.

During the pre-production phase, we clarify details like the game story, the gameplay style, the setting, the environment, the lore, the intended audience, and the platforms.

These elements will be extremely valuable when creating the first sketches for characters, props, locations, and weapons.

The main reason pre-production exists is to eliminate as much guesswork as possible in later stages of development. Here, we can experiment with different approaches, add or eliminate details, and try variations in color palettes. Changing any of those elements during later stages is time-consuming and tends to be very costly, so we try to wrap everything up during pre-production.

Then we go to the storyboard phase, which shows characters interacting with each other or the environment. Here, we imagine camera angles, transitions, lighting, and other effects. This feeds the next stage, animatics, where we apply camera effects, reference sounds, narration, and background music. This process starts to shape the game and the story.

After experimenting with all these elements, we finally come to an agreement on the story’s narrative style and decide on the art style. At this point, the game acquires its personality, which can range from photorealistic for added immersion, to pixel art, to cel-shaded animations for a more cartoonish feel.

When the first models, sketches, storyboards, and game style are all approved, they go to the next stage of the production pipeline.

Production

Production is where most of the game's assets are created. Typically, this is the most resource-demanding stage.

Modeling

During this stage, artists start translating a vision into assets that can be manipulated in later stages. Using specialized software, modelers turn the sketches and ideas into 3D models. The 3D software arena today is dominated by Maya, 3ds Max, and Blender.

When modeling props, the most obvious place to start is with vertices. The vertices are joined with lines to form edges, which in turn form faces, polygons, and surfaces. This process is time-consuming, and its complexity depends on how detailed we want our models to look.
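As a rough illustration of the vertex, edge, and face relationship described above, here is a minimal Python sketch. The quad, the `edges_of` helper, and the `triangle_count` helper are all hypothetical names made up for this example; real modeling packages store meshes in far richer structures.

```python
# A unit quad: vertices come first, then faces that index into them.
# All names here are illustrative and not taken from any modeling tool.
vertices = [
    (0.0, 0.0, 0.0),  # vertex 0
    (1.0, 0.0, 0.0),  # vertex 1
    (1.0, 1.0, 0.0),  # vertex 2
    (0.0, 1.0, 0.0),  # vertex 3
]
faces = [(0, 1, 2, 3)]  # one four-sided polygon


def edges_of(faces):
    """Collect the unique undirected edges that bound the given faces."""
    edges = set()
    for face in faces:
        for i, a in enumerate(face):
            b = face[(i + 1) % len(face)]  # wrap around to close the loop
            edges.add((min(a, b), max(a, b)))
    return sorted(edges)


def triangle_count(faces):
    """An n-sided polygon splits into n - 2 triangles (fan triangulation)."""
    return sum(len(face) - 2 for face in faces)


print(edges_of(faces))        # [(0, 1), (0, 3), (1, 2), (2, 3)]
print(triangle_count(faces))  # 2
```

Even this toy version shows why detail is expensive: every extra polygon adds vertices, edges, and triangles that the target platform has to process.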

We usually start with a simple 3D model, then add finer details such as wrinkles through sculpting. The target platform informs the level of detail we want for a model: the more detailed the model, the higher the triangle count, and the more processing power it takes to render.

One big breakthrough is the use of photogrammetry to generate hyperrealistic models. These range from real-life objects to topographical surveys of whole areas, such as cities and racetracks, creating enhanced immersion. Games such as Forza Motorsport and Call of Duty: Modern Warfare have extensively used photogrammetry to bring real objects and scenarios to life and provide their players with realistic environments and props.

Photogrammetry also allows studios to generate truer-to-life visuals for a fraction of the cost it takes to manually sculpt assets. Many studios are switching towards this technology as it saves a lot of modeling and sculpting time, making the necessary investment worth it.

Regarding software, ZBrush allows us to sculpt or manipulate ultra-high-resolution models for characters and props that can reach north of 30 million polygons. We can then retopologize our models to specific polycounts depending on the technical requirements and target platform. This has made ZBrush the industry standard.

Many studios have leveraged the power of Autodesk 3ds Max and Maya for over ten years, given their ease of use and pipeline integration features. But Blender offers a lot more flexibility for solo artists to implement custom solutions for their workflow without a problem.

Room 8 Studio chose to build several pipelines that accommodate specific projects and requirements.

Rigging

Here is where things get a little more technical. Riggers are responsible for giving 3D models a skeleton and articulating every part in a way that makes sense. Then they tie these bones to the surrounding geometry, making it easier for animators to move each section individually when needed.

They also build the controls that will govern the movement of characters in the form of automated scripts. This makes the process a lot easier and more efficient, as the same controls can also be used for other characters and projects.
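To make the idea of a skeleton more concrete, here is a minimal 2D sketch in Python. The `Bone` class and the arm setup are hypothetical and exist only for this illustration; production rigs work in 3D with full transforms, constraints, and skin weights. What the sketch shows is the core behavior riggers rely on: rotate a parent bone and every child follows.

```python
import math


class Bone:
    """A bone with a length and a rotation relative to its parent (illustrative)."""

    def __init__(self, name, length, parent=None):
        self.name = name
        self.length = length
        self.parent = parent
        self.local_angle = 0.0  # radians, relative to the parent bone

    def world_angle(self):
        """Accumulate rotations from the root down to this bone."""
        parent_angle = self.parent.world_angle() if self.parent else 0.0
        return parent_angle + self.local_angle

    def head(self):
        """Where the bone starts: the tip of its parent, or the origin."""
        return self.parent.tail() if self.parent else (0.0, 0.0)

    def tail(self):
        """Where the bone ends after applying its world rotation."""
        x, y = self.head()
        angle = self.world_angle()
        return (x + self.length * math.cos(angle),
                y + self.length * math.sin(angle))


upper_arm = Bone("upper_arm", 2.0)
forearm = Bone("forearm", 1.5, parent=upper_arm)

# Rotating only the shoulder moves the forearm too, because it is a child bone.
upper_arm.local_angle = math.pi / 2
print(forearm.tail())  # roughly (0.0, 3.5), up to floating-point error
```

An animator driving this chain only has to set two angles; the hierarchy takes care of where each joint ends up.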

Animation

Animators use appropriately rigged 3D models to create fluid motion and give characters life. Attention to detail is required during this stage, as every limb and muscle must move organically and believably.

The animation process has changed drastically over the years, especially after the adoption of non-linear pipelines.

After the animation is approved, it should be baked into a geometry format that separates every frame into individual poses that will be used for simulation and lighting.

Look

Here is where we apply texture and shading to all assets. Every object and surface needs to obey a color palette. Things like skin color, clothing, and items are all painted in this stage.

We also apply textures to objects according to the style agreed upon during pre-production.

For physically based rendering (PBR), we can use a lot of tools. The Adobe Substance suite has proven to be incredibly versatile, making it a favorite even among big players in the animation industry such as Pixar.

Substance Designer, for example, allows us to create textures and materials with ease that can then be procedurally generated depending on the composition of the target mesh.

Substance Painter made it much easier to generate and apply textures to 3D objects, making the pipeline a lot more streamlined and allowing artists to import their deliverables directly into the game engine. It also offers terrific baking tools that give you the power to bake the detail of high-poly meshes onto your low-poly mesh without losing it. That's a blessing when dealing with tight polygon budgets.

After we apply textures to our objects, we decide how they will interact with light sources, coupling the properties of the surface with the chosen textures and arriving at the final look.

Unreal Engine 5, one of the most powerful engines in our pipelines, features a dynamic global illumination and reflections system called Lumen. It allows for global illumination without having to bake lightmaps to provide accurate lighting. This was huge for artists as we no longer needed to deal with lightmap UVs and other tedious processes that would make the lighting process a drag.

For contrast, when crafting a location, we previously had to set up lightmaps and bake them into the scene so we could get global illumination, realistic shadows, and all those aspects that help create a believable setting. The process could take us whole days just to get the UV channels right.

Lumen also gives us a highly optimized way of creating dynamic lighting that covers both outdoor and indoor spaces because it switches between three tracing methods efficiently without becoming resource intensive.

Simulation

There are things that are too complex to animate by hand. Here is where the simulation department steps in. The randomness of water waves and ripples, or the effect of the wind and movement on textures and hair, are all programmed by simulators.

Today's technology and simulation algorithms allow for highly realistic motions for liquids, gases, fire, clothing, and even muscular mass on characters in action.

Assembly

In this stage, we put everything together to create the finalized product. Every asset is like a Lego piece that will serve as a building block for a level. Depending on the game engine used in the pipeline (Unreal, Unity, or other custom engines), we need to make sure asset integration occurs smoothly. Therefore, during the assembly process, it is essential to have a solid pipeline that allows us to have everything at hand and avoid any bottlenecks that might impact efficiency.

A game-changing aspect of the newer UE5 engine is the Nanite feature. This proprietary solution has drastically changed pipelines by allowing us to import and render high-polycount 3D models in real time without performance degradation.

The engine does that by converting assets into more efficient meshes that change dynamically depending on the distance from the camera. So, for example, triangles get smaller when the camera gets closer and vice versa. This means that traditional LOD tinkering is no longer necessary.
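For contrast, the traditional LOD tinkering mentioned above usually meant authoring several versions of each mesh and swapping between them by camera distance. The Python sketch below illustrates that manual approach only; it is not how Nanite works internally, and the distance thresholds and triangle counts are made-up values.

```python
# Hypothetical LOD table: (maximum camera distance in metres, triangle count).
LOD_LEVELS = [
    (10.0, 50_000),  # LOD0: full detail up close
    (30.0, 12_000),  # LOD1
    (80.0, 3_000),   # LOD2
]
FALLBACK_TRIANGLES = 500  # lowest-detail mesh for anything farther away


def triangles_for_distance(distance):
    """Return the triangle count of the mesh to draw at this camera distance."""
    for max_distance, triangles in LOD_LEVELS:
        if distance <= max_distance:
            return triangles
    return FALLBACK_TRIANGLES


for d in (5.0, 25.0, 200.0):
    print(d, triangles_for_distance(d))
```

Every row in a table like this is a mesh an artist had to build and tune by hand, which is the workload the article describes as no longer necessary.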

Post-production

There are many aspects to the post-production phase, but the most significant are color correction and lighting, as they set the final tone for the game or trailer. Here is where we give the game its unique visual style by adding specific tints or filters to the picture. The iconic greenish shade used throughout the Matrix movies was applied during the post-production stage.
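At its simplest, a tint like the one described above can be thought of as a per-channel multiplier applied to every pixel. The Python sketch below assumes 8-bit RGB values, and the `GREEN_TINT` factors are invented for illustration; real color grading relies on much more sophisticated tools such as curves and lookup tables.

```python
def apply_tint(pixel, tint):
    """Scale each 8-bit RGB channel by its tint factor and clamp to 255."""
    return tuple(min(255, round(channel * factor))
                 for channel, factor in zip(pixel, tint))


# Hypothetical greenish grade: pull red and blue down, push green up slightly.
GREEN_TINT = (0.8, 1.1, 0.8)

print(apply_tint((200, 200, 200), GREEN_TINT))  # (160, 220, 160)
print(apply_tint((250, 250, 250), GREEN_TINT))  # (200, 255, 200)
```

A neutral gray comes out visibly green, and bright values are clamped so they stay within the valid range.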

But there are other factors that make post-production a critical phase. These include profiling, measuring frame rates and frame times, making sure the polygon budget fits within the memory allocated by the target platform, and deciding which lights and shadows should be baked in or treated as dynamic.
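The arithmetic behind these checks is simple enough to sketch in Python. The function names, the sample frame times, and the byte sizes per vertex and index below are assumptions made for this example; actual figures depend on the engine, the vertex format, and the target platform.

```python
def frame_budget_ms(target_fps):
    """Time available per frame, in milliseconds, at a target frame rate."""
    return 1000.0 / target_fps


def average_fps(frame_times_ms):
    """Average frame rate over a list of measured frame times."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def frames_over_budget(frame_times_ms, target_fps):
    """Frame times that took longer than the per-frame budget."""
    budget = frame_budget_ms(target_fps)
    return [t for t in frame_times_ms if t > budget]


def mesh_memory_bytes(vertex_count, triangle_count,
                      bytes_per_vertex=32, bytes_per_index=4):
    """Rough mesh footprint: vertex data plus three indices per triangle.

    The default sizes are assumed values, not those of any specific engine.
    """
    return vertex_count * bytes_per_vertex + triangle_count * 3 * bytes_per_index


samples = [16.0, 17.5, 15.0, 33.0, 16.5]  # hypothetical frame times in ms
print(round(frame_budget_ms(60), 2))      # 16.67
print(round(average_fps(samples), 1))     # 51.0
print(frames_over_budget(samples, 60))    # [17.5, 33.0]
print(mesh_memory_bytes(1000, 2000))      # 56000
```

Note how a single 33 ms spike drags the average well below 60 frames per second, which is why we look at individual frame times and not just the average.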

In animation studios, the bulk of the resources traditionally goes into the production stages. This is in stark contrast with what happens in live-action studios, where the cost of finishing a product weighs more heavily on the post-production stage.

For example, film directors enjoy greater freedom and flexibility during production stages than what is allowed for traditional animation studios, as a real-time feedback loop allows directors, actors, and crew to bring the studio's vision to life in a few shots.

Today, tools such as Unreal Engine 5 grant the same freedom to animators. Developers can select assets, characters, and locations and move cameras and angles around, giving them the ability to visualize deliverables. If they want to have an idea of how an asset can be manipulated, they can do it directly through the engine in real time. This eliminates the previs-director gap by allowing the director to instantly manipulate assets and provide immediate feedback.

Moreover, we can now aggregate every asset into the engine, so the process moves freely between production stages. With non-linear pipelines, studios can spread expenses more evenly across production stages.

One of the main effects of this approach is that we can now dish out production-grade assets right from the pre-production stages and then propagate them through the entire pipeline without a problem.

In the last steps of this process, the quality assurance department performs functionality and compliance testing to make sure the product works as advertised and that it complies with the requirements imposed by the platform.

Studios today must put into place an agile pipeline system that leverages the power of the latest tools and technology trends to make sure the vision comes to life while remaining competitive. The switch towards non-linear pipelines and real-time engines is set to be a game changer, allowing even the smallest teams to compete with industry behemoths without missing a beat.

However, the expert use of ever more powerful design, animation, and development tools is still what makes the difference in this industry. No matter how far technology goes, without a talented and experienced team that understands how to leverage these tools and put together an efficient production chain, these tools can only get you so far.

Maksim Makovsky is the 3D division art director at Room 8 Studio. With ten years of experience in the video game industry, he has worked on some of the biggest titles in the business including Call of Duty, Control, Overkill's The Walking Dead, War Thunder, and World of Tanks.