Wii U blitzes PS3 and 360 as the "greenest" console

Digital Foundry assesses the power consumption of Nintendo's new home console

After a few weeks of hands-on time with Wii U, it's now pretty much confirmed that there is no revelatory increase in overall processing power compared to the Xbox 360 or PlayStation 3, but there is one technological element where Nintendo's design is leaps and bounds ahead of its vintage 2005/2006 competitors: efficiency. In terms of performance per watt, Wii U is the clear winner, providing an equivalent graphical and gameplay experience using less than half the power consumed by the other consoles.

"Wii U draws so little power in comparison to its rivals that its tiny casing still feels cool to the touch during intense gaming."

When the Wii U's casing and overall form factor were first revealed, we had genuine concerns that Nintendo could be heading for its own RROD challenge. Microsoft and Sony's woes with excessive heat are a matter of record: the process of heating up the main processors during gameplay and then cooling them down when the console was turned off caused the lead-free solder connections to break over time, disabling the hardware. Since then the platform holders have shrunk the main processors, making them cooler, more efficient and less likely to fail, but the form factor of the units is still fairly large.

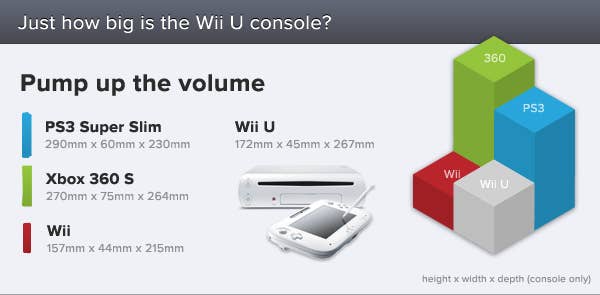

Initial Wii U tech demos showed that the hardware offered similar performance to the current-gen consoles, but the form factor of the machine was even smaller than the "slim" versions of its competitors. Indeed, Wii U is even smaller than the recently released PlayStation 3 "Super Slim". Wii U has a lot more ventilation than its predecessor, but the space available for a heatsink and fan is much smaller than that of both Microsoft and Sony's offerings.

Nintendo's own tear-down of the Wii U provided some answers: CPU and GPU were bonded together into a single assembly, meaning that the concentration of heat was more localised and thus space could be saved on the cooling set-up. But despite this, overall heat would surely remain an issue - to the point where Microsoft actually went one step further with its 360S, integrating CPU and GPU into one piece of silicon.

"Nintendo had the luxury of building its entire architecture from scratch rather than down-sizing older designs, resulting in a more efficient system"

IBM has previously confirmed that its Wii U CPU is fabricated on a similar 45nm process to the processors in the Xbox 360 and PS3, while we're pretty confident that the Wii U's Radeon graphics core is using the same 40nm process as the RSX chip in Sony's console. 28nm is the next step in reducing the physical size of the main chips, but the volume simply wasn't there to sustain a mainstream console launch accommodating millions of units when Wii U first began production. It's one of the principal reasons we need to wait until next year for the next generation Xbox and PlayStation.

The power draw figures in the table below demonstrate that we needn't have worried. Wii U is remarkably efficient to the point where you can barely feel the heat when you rest your hand against the casing - something we can't say about the 360S or even the new PlayStation 3 "Super Slim". Its overall power draw is actually lower than many laptops (in fact we wouldn't be surprised to see Wii U console battery pack attachments launching at some point for mobile gameplay) - a remarkable state of affairs considering that its gaming performance easily beats those same notebooks.

| | Wii U | Xbox 360S | PS3 Super Slim |
|---|---|---|---|
| Front-End | 32w | 67w | 66w |
| FIFA 13 Demo | 32w | 76.5w | 70w |
| Netflix HD | 29w | 65w | 62.5w |

We find that the Wii U is drawing around 32 watts of power during gameplay and despite running our entire library of software, we only ever saw an occasional spike just north of 33w. The new PS3 uses 118 per cent more juice under load, while our 2010 Xbox 360S was even less efficient, requiring 139 per cent more power for gameplay. We understand that Microsoft has revised the design of its console since it first launched, but the main CPU/GPU combo processor still uses the same 45nm process, so we don't expect to see any major game-changing efficiency gains. All consoles show a drop in power-draw when engaged in media playback (we tested an HD episode of Dexter streamed via Netflix), lower even than running the front-end menus of each device.
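For readers who want to check the percentages against the table, here is a minimal Python sketch of the arithmetic. The wattages are copied from the table; the names `POWER_DRAW_W`, `percent_more` and `extra_draw_vs_wii_u` are our own illustrative choices, not part of any tool used in the testing.

```python
# Power draw in watts, copied from the table in this article.
POWER_DRAW_W = {
    "Front-End": {"Wii U": 32.0, "Xbox 360S": 67.0, "PS3 Super Slim": 66.0},
    "FIFA 13 Demo": {"Wii U": 32.0, "Xbox 360S": 76.5, "PS3 Super Slim": 70.0},
    "Netflix HD": {"Wii U": 29.0, "Xbox 360S": 65.0, "PS3 Super Slim": 62.5},
}


def percent_more(baseline_w: float, other_w: float) -> float:
    """Return how much more power other_w is than baseline_w, in per cent."""
    return (other_w - baseline_w) / baseline_w * 100.0


def extra_draw_vs_wii_u(scenario: str) -> dict:
    """Per-console extra power draw relative to Wii U for one test scenario."""
    row = POWER_DRAW_W[scenario]
    baseline = row["Wii U"]
    return {
        console: percent_more(baseline, watts)
        for console, watts in row.items()
        if console != "Wii U"
    }


if __name__ == "__main__":
    for scenario in POWER_DRAW_W:
        for console, pct in extra_draw_vs_wii_u(scenario).items():
            print(f"{scenario}: {console} draws {pct:.1f}% more than Wii U")
```

Using the FIFA 13 demo row, this works out to 139.1 per cent more for the Xbox 360S and 118.75 per cent more for the PS3 Super Slim, in line with the gameplay figures quoted above (the 118 per cent figure looks to be rounded down).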

"Raw CPU processing power is an issue, but launch games like Mass Effect 3 show that Wii U is competitive against its current-gen competitors"

So how has Nintendo achieved such a comprehensive leap in performance per watt? Well, there's no such thing as a free lunch and there is some compromise. The Wii U's lack of CPU power compared to the Xbox 360 and PS3 is a topic that's been covered extensively before, but the main takeaway here is that a CPU running at just 1.24GHz and using far fewer transistors than the competition (the physical size of the chip is much smaller than both Xenon and Cell) is obviously going to consume far less power than its rivals. Gains in the efficiency of the design and the inclusion of features such as out-of-order execution will - to a certain degree - make up for the lack of dual hardware threads and the lower clock speed, but there is a dip in raw computational power that cross-platform devs are going to need to work around.

Regardless, we're still left with a significant difference in terms of power consumption, which takes us into an area where we still know relatively little - the hardware make-up of the Wii U's Radeon GPU core. Integrating the fast work RAM - the eDRAM - directly onto the GPU core will have some benefits (it's a daughter die on Xbox 360, sitting next to the processor), but aside from that, details are light.

However, what we do know is that AMD crafted the Wii U graphics core by basing it on existing designs hailing from the 4xxx era, the so-called RV770 architecture. Looking into the genesis of this GPU line from its original PC release throws up some interesting tidbits: stream processors were more efficient than their predecessors, but most interesting of all, we discover that AMD was able to increase performance by 40 per cent per square millimetre of silicon - another big leap in efficiency.

One thing that did stand out from our Wii U power consumption testing - the uniformity of the results. No matter which retail games we tried, we still saw the same 32w result and only some occasional jumps higher to 33w. Those hoping for developers to "unlock" more Wii U processing power resulting in a bump higher are most likely going to be disappointed, as there's only a certain amount of variance in a console's "under load" power consumption. Also interesting were standby results from each console - 0.5w was consumed by all of them, but Xbox 360 and PS3 did spike a little higher periodically, presumably owing to background tasks that run even when the console is not operating.

Also interesting are the overall efficiency gains of the PS3 across its six-year lifespan - a good indication of how far we have come in shrinking the same core architecture across several generations of fabrication nodes. Based on our previous tests, the launch PlayStation 3 (90nm CPU and GPU) guzzled a phenomenal 195-209w during gameplay, while the initial Slim revision (45nm CPU and 65nm GPU) brought that down to 95-101w. The Super Slim features a 45nm CPU/40nm GPU combo and takes power usage under load to the 75-77w range. While we don't expect to see PS3 and Xbox 360 ever seriously challenging the Wii U's remarkably low power draw, a 22nm Cell is on the cards and it makes sense that the RSX will shrink further down to the same 28nm currently used by NVIDIA's more recent graphics processors. For its part, Xbox 360 should see notable efficiency gains in the future with a 32nm shrink for its integrated CPU/GPU chip...
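To put the PS3's generational gains into rough numbers, the sketch below takes the gameplay power ranges quoted above and compares each revision against the launch model. Using the midpoint of each range as a representative figure is our own simplifying assumption, and the names `PS3_LOAD_RANGE_W`, `midpoint` and `reduction_vs_launch` are purely illustrative.

```python
# Gameplay power ranges in watts, as quoted in the paragraph above.
PS3_LOAD_RANGE_W = {
    "Launch": (195.0, 209.0),     # 90nm CPU and GPU
    "Slim": (95.0, 101.0),        # 45nm CPU, 65nm GPU
    "Super Slim": (75.0, 77.0),   # 45nm CPU, 40nm GPU
}


def midpoint(watt_range: tuple) -> float:
    """Midpoint of a (low, high) range; an assumed stand-in for typical draw."""
    low, high = watt_range
    return (low + high) / 2.0


def reduction_vs_launch(model: str) -> float:
    """Per cent reduction in midpoint power draw relative to the launch PS3."""
    launch_w = midpoint(PS3_LOAD_RANGE_W["Launch"])
    model_w = midpoint(PS3_LOAD_RANGE_W[model])
    return (launch_w - model_w) / launch_w * 100.0


if __name__ == "__main__":
    for model in ("Slim", "Super Slim"):
        print(f"{model}: {reduction_vs_launch(model):.1f}% less than launch PS3")
```

On those midpoints (202w, 98w and 76w), the Slim cuts gameplay power by roughly 51 per cent against the launch machine and the Super Slim by roughly 62 per cent.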