The data is in and your review score still matters - EEDAR

"But the 80s might be the new 90s," says Patrick Walker, EEDAR's Head of Insights and Analytics

The past several years have seen significant change in the games industry; everything from who plays games to how and where those games are paid for and consumed has been shifting and diversifying. Just perusing the topics that have dominated recent headlines - games as a service, free-to-play, Steam sales, casual mobile gamers, eSports, virtual reality - shows how rapidly the games industry is evolving.

This rapid pace of change has led many in the industry to question whether the average review score, as aggregated by sites such as Metacritic.com, is still a critical metric. Recently, Justin Bailey, COO of Double Fine, made headlines by stating that Metacritic "doesn't really matter, as far as sales of the game."

The importance of average review scores (i.e., Metacritic scores) as a measure of game quality has always been a subject of debate. Common criticisms hold that game quality can't be summarized in a single score and that publishers have misused the metric as a measuring stick for incentives and business decisions.

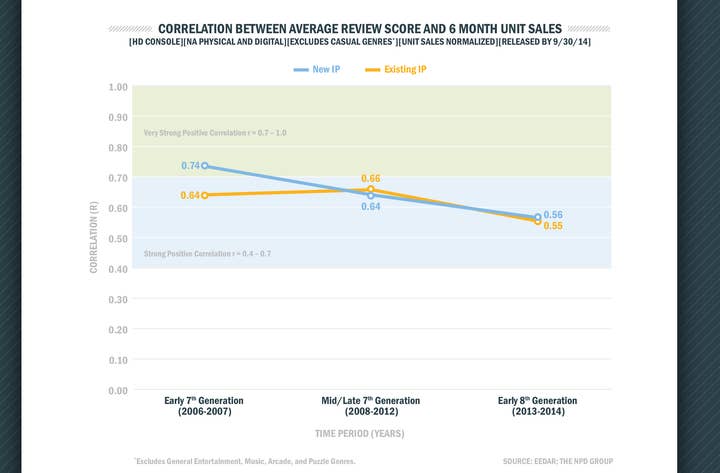

Historically, data has shown a strong relationship between console game sales and average review score, especially early in a console cycle. The causality of this relationship has been debated: Do people actually go to Metacritic.com, or are they just reading reviews on bigger sites like IGN and GameSpot? Is the Metacritic score actually measuring a game's quality, or some other factor, like development budget or marketing? Even so, it is generally agreed that a Metacritic score can provide insight into how well a game did financially. However, this is changing at the beginning of the 8th console generation.


High-profile new IP releases such as Destiny and Titanfall have achieved massive financial success despite critical reception and average review scores that fell below industry expectations. A common theory is that there may be a growing disconnect between the features that actually drive sales and the features critics focus on when reviewing a game (e.g., the lack of endgame content in Destiny at launch, or the lack of a single-player campaign in Titanfall).

To see whether the data supports this growing skepticism, EEDAR analyzed whether the relationship between sales and average review score is as strong at the beginning of the 8th generation as it was at the beginning of the 7th generation. The analysis was limited to the titles for which this relationship has historically been strongest: HD console titles (PS3, PS4, Xbox 360, Xbox One) in the core genres, released through September 2014.
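The selection criteria can be expressed as a simple filter. This is a minimal sketch, assuming a hypothetical `Title` record: the article does not say which genres count as "core," so `core_genre` is a hypothetical boolean flag, and the cutoff date is read from "released through September 2014."

```python
from dataclasses import dataclass
from datetime import date

# Platforms named in the analysis.
HD_PLATFORMS = {"PS3", "PS4", "Xbox 360", "Xbox One"}
# "Released through September 2014" read as an inclusive cutoff.
CUTOFF = date(2014, 9, 30)


@dataclass
class Title:
    name: str
    platform: str
    core_genre: bool  # hypothetical flag; the article does not list the core genres
    release_date: date


def in_scope(t: Title) -> bool:
    """Return True if a title matches the stated selection criteria."""
    return (
        t.platform in HD_PLATFORMS
        and t.core_genre
        and t.release_date <= CUTOFF
    )


def select_titles(titles):
    """Keep only the titles that fall inside the analysis scope."""
    return [t for t in titles if in_scope(t)]
```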

EEDAR compared the correlation between review score and sales success in three periods: the early 7th generation, the middle/late 7th generation, and the early 8th generation. Sales success was measured as normalized six-month North American physical and digital unit sales.

The data suggests that there is some validity to claims that review score is less critical to sales. The correlation between two sets of numbers is commonly measured with Pearson's r; a correlation above 0.7 is considered very strong, and one between 0.4 and 0.6 is considered moderately strong. At the beginning of the 7th generation, as consumers were encountering new kinds of games, the correlation between reviews and sales performance was especially high for New IPs: consumers trusted media sources to guide them on which games to buy. This relationship weakened as the generation went on, however, as consumers became more familiar with the brands and experiences they enjoyed and relied less on critical reception.
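For readers unfamiliar with the statistic, the sketch below computes Pearson's r from scratch and labels it using the thresholds quoted above. It is illustrative only: the function names are hypothetical, the inputs would be paired review scores and normalized sales, and values that fall between the quoted ranges (such as 0.65) are deliberately left unlabeled because the article does not classify them.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variances, all without the 1/n factor (it cancels out).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("r is undefined when either sequence is constant")
    return cov / math.sqrt(var_x * var_y)


def describe(r):
    """Label r using only the thresholds quoted in the article."""
    if r > 0.7:
        return "very strong"
    if 0.4 <= r <= 0.6:
        return "moderately strong"
    return "outside the quoted ranges"
```

A value near 1 means titles with higher review scores reliably sold better; the article's finding is that this value has dropped in the early 8th generation while remaining "strong."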

Interestingly, while the correlation between review scores and sales is still "strong," the 8th generation has seen the relationship weaken compared to the early 7th generation. This happened even though the new generation brought new business models and experiences, the same kind of novelty that made consumers lean on reviews early in the 7th generation. EEDAR's theory is that the rise of smartphones and social media has created an environment in which consumers get their information from a broader range of sources.

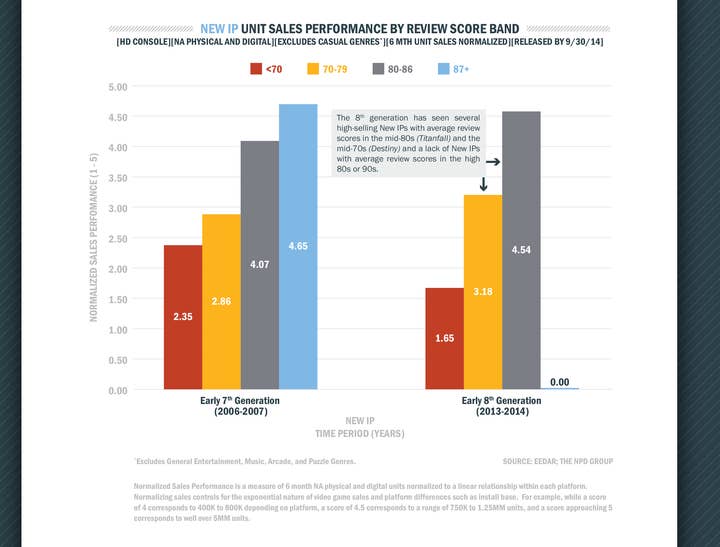

A deeper investigation into sales success at different review score ranges reveals the score ranges and game types for which review score might not be as critical to success as it was in the past. The graphs below show sales success normalized on a scale of 1 to 5. The first graph shows sales success for HD console titles in core genres at different average review score ranges for New IPs, comparing the early 7th generation to the early 8th generation.
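The article does not say how unit sales were mapped onto the 1-to-5 scale. One simple option, assumed here purely for illustration, is linear min-max scaling, where the lowest-selling title in a comparison set maps to 1 and the highest maps to 5:

```python
def scale_1_to_5(values):
    """Linearly min-max scale values onto [1, 5] (an assumed method)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Every value is identical, so place all of them at the midpoint.
        return [3.0 for _ in values]
    return [1 + 4 * (v - lo) / (hi - lo) for v in values]
```

Min-max scaling is sensitive to outliers: a single blockbuster stretches the range and compresses everything else toward 1, so a real model might use a different normalization.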

The data reveals two important trends. First, New IPs in the 8th generation that received moderately high review scores (the 70s and mid-80s) have outperformed titles in these review score ranges in the early 7th generation. This is in line with the success of Destiny, which has an average review score in the mid-70s, and Titanfall, with an average review score in the mid-80s. Second, there has yet to be a new IP in the 8th generation with an average review score in the high 80s or 90s (87+); EEDAR treats The Last of Us Remastered on PS4 as an existing IP. There is still a significant sales performance penalty for titles with review scores below 70, and that penalty is stronger than it was in the early 7th generation.
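Comparisons like these come from grouping titles into review score bands and averaging their normalized sales within each band. The sketch below uses the band boundaries mentioned in the text (below 70, the 70s, 80 to 86, and 87+); the function names and the `(review_score, normalized_sales)` input format are hypothetical, and any inputs passed to it are not EEDAR data.

```python
from collections import defaultdict


def score_band(score):
    """Map an average review score to the bands discussed in the article."""
    if score < 70:
        return "below 70"
    if score < 80:
        return "70-79"
    if score < 87:
        return "80-86"
    return "87+"


def mean_sales_by_band(titles):
    """Average normalized sales per band.

    titles: iterable of (review_score, normalized_sales) pairs.
    Bands with no titles are simply absent from the result.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for score, sales in titles:
        band = score_band(score)
        totals[band] += sales
        counts[band] += 1
    return {band: totals[band] / counts[band] for band in totals}
```

Running this separately for each generation and comparing the per-band averages is one way to see whether, for example, titles in the 70s gained ground or titles below 70 fell further behind.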

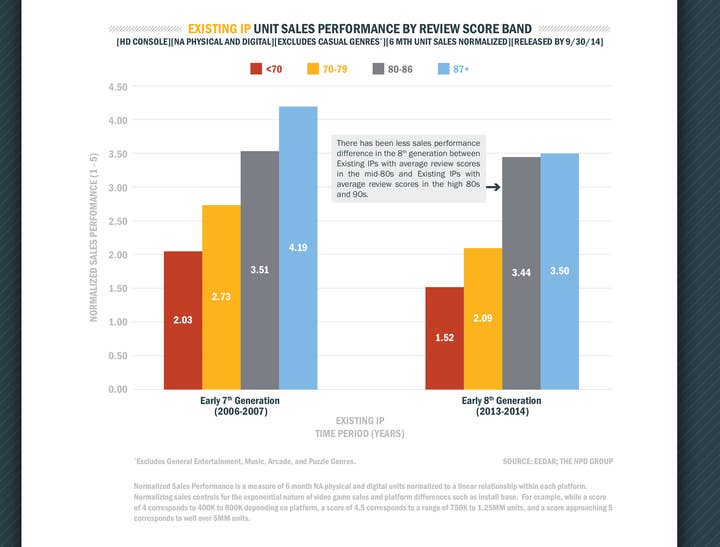

The second graph shows the same data for Existing IPs - sales success for HD console titles in core genres at different average review score ranges.

For Existing IPs, the relationship between sales and average review score follows a similar trend to the one for New IPs. While there is clearly a penalty for an Existing IP that receives a low review score in the 60s or even the 70s, the link between a breakout review score in the high 80s or 90s and breakout sales success is weaker. For 8th generation Existing IPs, the sales performance score is approximately the same for games with an average review score between 80 and 86 as for games with an average review score of 87 or higher.

The data for both Existing and New IPs suggests a common theme - 80s are the new 90s. While achieving a breakout review score does not appear to be as critical as it used to be, it is still important for an HD console game to reach a certain threshold of critically determined quality.

The broader point is that the video game industry is changing rapidly, and understanding game performance requires combining a broader array of data sources. EEDAR believes that a game's average review score is still important for predicting the sales success of a title on the HD consoles. We rely heavily on average review score in our forecasting models, and we offer expert-driven services that evaluate the quality of an unreleased game using the average review score framework. However, we also believe that incorporating more data sources is more important than ever. To that end, our models and services increasingly draw on new data sources, such as social media data, in combination with more traditional approaches.

Note on methodology: EEDAR calculates physical and digital sales through a combination of sources, including point-of-sale data from a partnership with the NPD Group and EEDAR internal models for worldwide digital and physical sales based on data from the proprietary EEDAR database.